Email open rate is important. After all, if someone doesn’t open your email, they can’t read it.

A/B testing your email subject lines is one of the best ways to optimize your email marketing efforts.

But what should you split test?

In today’s post, I’ve compiled 14 subject-line split testing ideas for you to try in your next campaign.

But first, a warning.

Email open rate is important, but it’s just one number. If you get a 100% open rate, but no clicks, that’s not a good email.

The easiest way to understand this is that statistically, the best email subject line is no subject line at all.

Sidekick found that emails with no subject line were opened 8% more than the rest.

I don’t recommend sending all your emails with empty subject lines…

When you A/B test, it’s important you measure more than just open rate, ideally a conversion rate as well.
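To make that concrete, here is a minimal sketch of tracking more than one metric per variant. The `VariantStats` class, the `best_by` helper, and every number below are made up for illustration; they don't come from any of the studies in this post.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Raw counts for one subject line variant (hypothetical numbers)."""
    name: str
    sent: int
    opens: int
    clicks: int
    conversions: int

    @property
    def open_rate(self) -> float:
        return self.opens / self.sent

    @property
    def click_rate(self) -> float:
        return self.clicks / self.sent

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.sent


def best_by(variants, metric):
    """Return the variant with the highest value for the named metric."""
    return max(variants, key=lambda v: getattr(v, metric))


# Hypothetical campaign: B wins on opens, but A wins on conversions.
variant_a = VariantStats("A", sent=5000, opens=1000, clicks=250, conversions=50)
variant_b = VariantStats("B", sent=5000, opens=1300, clicks=200, conversions=30)

for v in (variant_a, variant_b):
    print(f"{v.name}: open {v.open_rate:.1%}, click {v.click_rate:.1%}, "
          f"conversion {v.conversion_rate:.1%}")

print("Best by opens:", best_by([variant_a, variant_b], "open_rate").name)
print("Best by conversions:", best_by([variant_a, variant_b], "conversion_rate").name)
```

In this made-up example, picking the winner by open rate alone would choose the variant that converts worse.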

Now on to the tests.

By the end of this post, you’ll have over a dozen subject line split test ideas that will keep you busy for months, if not years.

More importantly, these split tests will help you optimize your emails to generate more engagement, traffic, and revenue.

Let’s get to it.

Try These 14 Data-Backed Subject Line Split Testing Ideas Today

This article is quite in-depth. To make it easier to navigate, I’ve broken it down by idea. Use the table of contents below to jump to a particular section of interest.

Table of Contents:

- 1. Name vs. No Name

- 2. First vs. Second Person

- 3. Urgency vs. No Urgency

- 4. Long vs. Short

- 5. Vague vs. Specific Benefits

- 6. Content Type Specified

- 7. Free vs. Synonyms

- 8. Emojis vs. No Emojis

- 9. Sentence vs. Title Case

- 10. Capitalization

- 11. Exclamation Points

- 12. Hyphens vs. Colons

- 13. Vague vs. Specific Copy

- 14. Gratitude vs. No Gratitude

1. Name vs. No Name

If you ask for subscribers’ names, try using their names not just in the email body, but in the subject line as well.

MailChimp did an extensive study on email open rates that I’ll be referring to multiple times in this post.

They looked at 24 billion emails across multiple industries and recorded a variety of metrics.

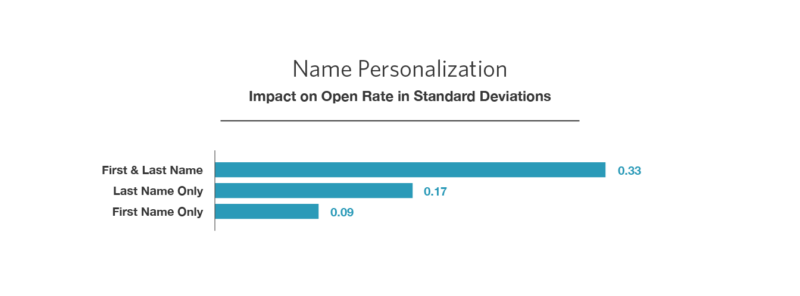

One of their findings was that including any variation of a name improved open rates.

The most effective name type to include was a first and last name.

Including just the last name was the second best way of personalizing a subject line, although it’s safe to assume that there was a “Mr.”, “Ms.”, or similar before it.

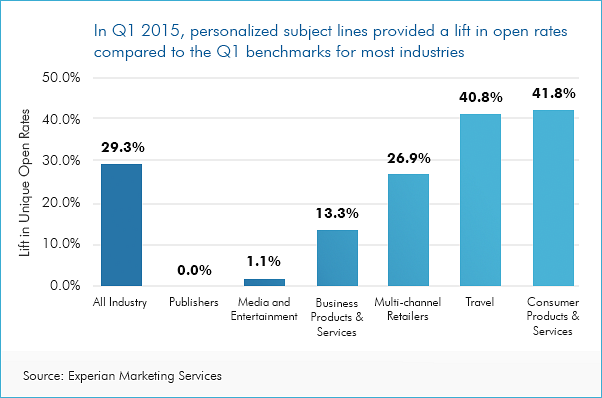

This is something that Experian Marketing also studied.

They also found that overall, personalization has a positive effect on email open rates. In fact, it helps in almost every industry.

One study found personalization led to an average open rate increase of 29.3%.

Based on the data from MailChimp and Experian, it’s clear that this is an impactful tactic that should be tested when possible.

Action Item: Including someone’s name in the subject line can help grab a reader’s attention and attract clicks. Do a split test where one subject line starts with the reader’s name, and one omits it.

For example:

- Here are 14 ways to split test email subject lines

- [Name], here are 14 ways to split test email subject lines
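One way to set up a test like this is sketched below. It assumes you have each subscriber's email address and (optionally) first name. The function names and the hash-based assignment are illustrative choices, not a feature of any particular email tool, and most tools will handle the split for you.

```python
import hashlib

BASE_SUBJECT = "Here are 14 ways to split test email subject lines"


def assign_variant(email: str, variants=("no_name", "name")) -> str:
    """Deterministically assign a subscriber to a variant by hashing the email.

    The same address always lands in the same group, so a subscriber
    never sees both versions across repeated sends.
    """
    digest = hashlib.sha256(email.strip().lower().encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def build_subject(first_name: str, variant: str) -> str:
    """Build the subject line for a subscriber in the given variant."""
    # Fall back to the plain subject if no name is on file.
    if variant == "name" and first_name:
        return f"{first_name}, {BASE_SUBJECT[0].lower()}{BASE_SUBJECT[1:]}"
    return BASE_SUBJECT


# Hypothetical subscribers.
subscribers = [("sam@example.com", "Sam"), ("alex@example.com", "")]
for email, first_name in subscribers:
    variant = assign_variant(email)
    print(email, "->", variant, "->", build_subject(first_name, variant))
```

Hashing the address (rather than picking at random on every send) keeps the groups stable, which makes it easier to compare results across campaigns.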

2. First Person vs. Second Person

For some reason, a lot of the good writing skills that we use to write email bodies and blog posts go out the window when writing a subject line.

Most notable is the lack of first-person language.

No one wants to feel like they receive the same email as thousands of others. We want to feel like the sender put some time and effort into crafting an email that we’d enjoy.

In general, there are 3 grammatical perspectives: first person, second person, and third person.

First person is the most personal, followed by second person, and then third person.

At first glance, you might think that using words like “you” (second person) would help the email recipient to see the email more like a conversation.

But the data doesn’t quite support that.

Sidekick found that emails that had “you” in the subject line were opened 5% less than without it.

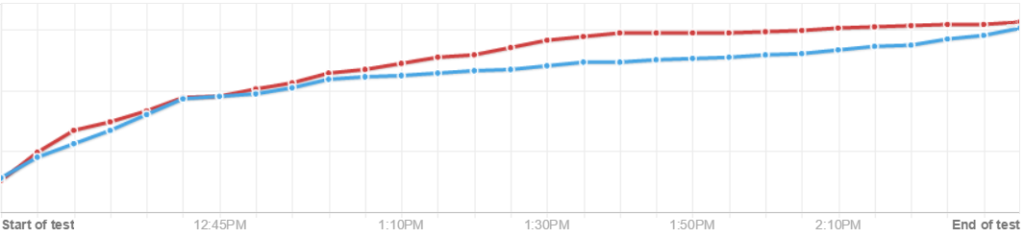

Optimizely also tested whether first or second person subject lines were better.

They used the following subject lines (bold added for emphasis):

- Control: Are you measuring your tests accurately? Measuring statistical significance of your test

- Variation: FAQ: How long should my test run?

The variation using a first-person term easily won, with a 33.9% higher conversion rate (measuring clicks).

That’s clearly just one test, and far from conclusive, but it shows that writing your subject lines with a different perspective can make a big difference.
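To judge whether a result like this is more than noise, one common approach is a two-proportion z-test. The sketch below is a generic version of that test, not the method Optimizely used, and the counts in it are invented for illustration.

```python
import math


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Return (z, two-sided p-value) for the difference between two rates."""
    p_a = success_a / total_a
    p_b = success_b / total_b
    # Pooled rate under the assumption that both variants perform the same.
    pooled = (success_a + success_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: the same 2.0% vs. 2.7% click rates at two list sizes.
small = two_proportion_z_test(20, 1000, 27, 1000)
large = two_proportion_z_test(200, 10000, 270, 10000)

print(f"1,000 per variant:  z={small[0]:.2f}, p={small[1]:.3f}")
print(f"10,000 per variant: z={large[0]:.2f}, p={large[1]:.4f}")
```

The same relative lift can be inconclusive on a small list and convincing on a large one, which is why a single test on a modest send shouldn't be treated as final.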

So, with that in mind, try experimenting between first-person and second person terms.

Here are some first-person words to try:

- I

- We

- Us

- My

- Our

- Me

And here are some (admittedly limited) second person words to try:

- You

- Your

- Yours

Action Item: Using first-person language may help your reader connect more with your subject line. Split test two similar emails where one uses first-person language, and the other uses second person language.

For example:

- How should I split test my emails?

- How should you split test your emails?

3. Urgency vs. No Urgency

Robert Cialdini included scarcity as one of the “weapons of influence” in his book, Influence, and urgency is closely related to it.

Urgency describes any situation where there is a time limitation, while scarcity indicates a limited amount of supply.

In a 2012 study about mobile email trends, Chief Marketer found that email subject lines with a sense of urgency had 22% higher open rates.

Other research since then has confirmed that urgency usually has positive effects on open rates.

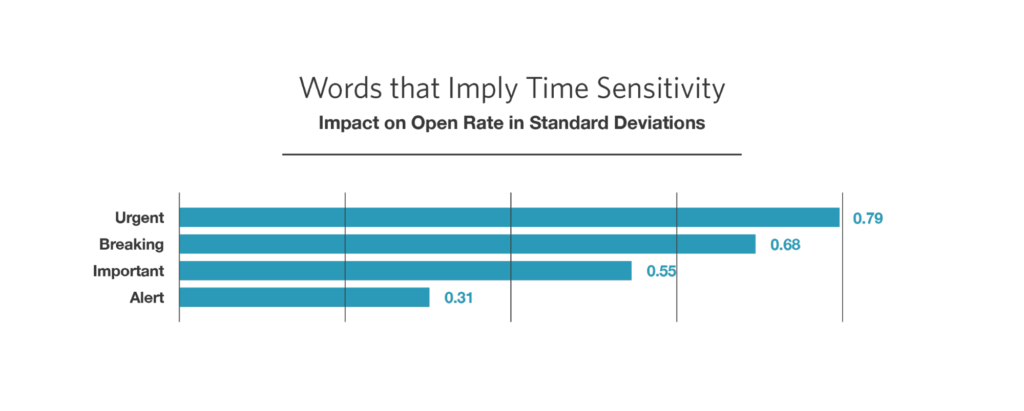

In MailChimp’s study, they looked at emails that used the following words:

- Urgent

- Breaking

- Important

- Alert

All four of those words imply time sensitivity and produce urgency, and all four significantly improved open rates. The best two were “urgent” and “breaking”.

This was also a test that Expedia (the travel company) looked at. They found that emails with the word “tomorrow” in the subject line were opened 10% more.

Here are some words and phrases you can think about including in your subject lines to trigger urgency:

- Today only

- Expires tomorrow

- [Urgent]

- One day left

- Only [#] left

Of course, not every email subject line can trigger urgency. So use with caution.

Action Item: Look for opportunities where you can add urgency triggers to your subject line. Then split test them against a version without the trigger.

For example:

- Download our split testing eBook for free

- Download our split testing eBook for free [today only]

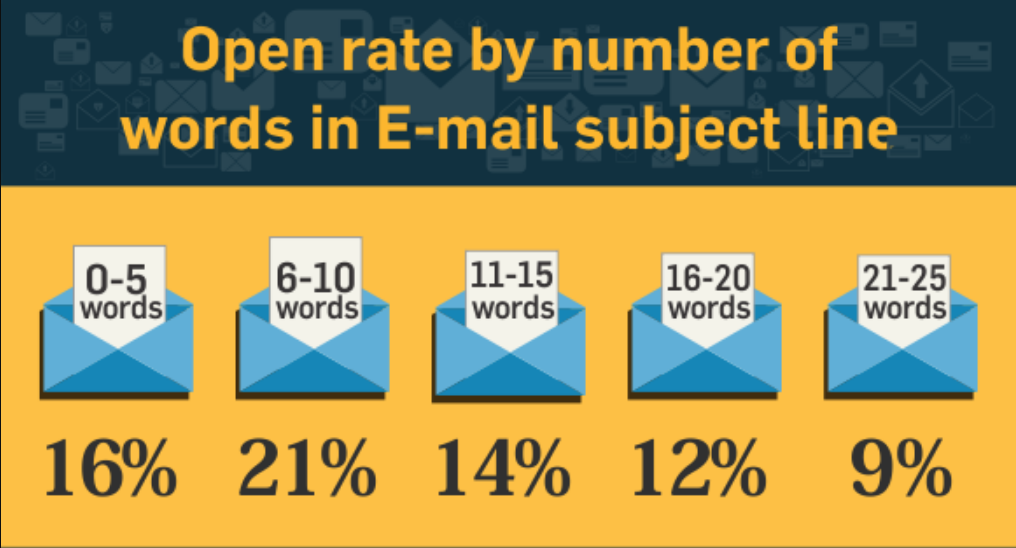

4. Long Subject Lines vs. Short Subject Lines

We all know that the length of an email subject line will affect open rates.

The sweet spot is right around 6-10 words in most cases.

There are a few reasons why longer subject lines have lower open rates:

- Display issues on different devices and browsers.

- People skim their emails and long subject lines dilute the main subject.

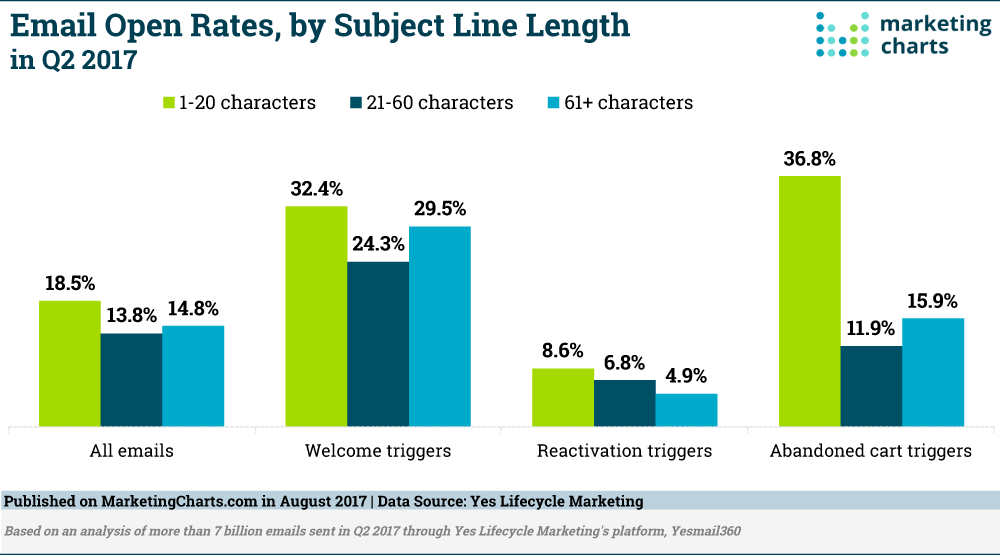

In 2017, Yes Lifecycle Marketing released an analysis of email open rates by subject length.

They found that no matter what type of email they looked at, email subject lines that were between 1 and 20 characters performed the best.

The average English word is about 4.5 characters, so 20 characters works out to roughly four words at most.
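As a quick sanity check on the characters-to-words conversion, here is a small sketch. The 4.5-character average and the 20-character cutoff come from the text above; the helper names are made up for illustration.

```python
AVG_WORD_LENGTH = 4.5  # average English word length quoted above
MAX_CHARS = 20         # upper end of the best-performing length range


def max_words(max_chars: int, avg_word_length: float = AVG_WORD_LENGTH) -> int:
    """How many average-length words fit, counting one space between words.

    n words need n * avg_word_length + (n - 1) characters.
    """
    return int((max_chars + 1) // (avg_word_length + 1))


def describe(subject: str):
    """Return (character count, word count) for a subject line."""
    return len(subject), len(subject.split())


print("Words that fit, ignoring spaces:", int(MAX_CHARS / AVG_WORD_LENGTH))
print("Words that fit, counting spaces:", max_words(MAX_CHARS))
print(describe("The 14 Best Subject Line Split Tests"))
```

Depending on whether you count the spaces, 20 characters holds about three or four average-length words, so a subject line in that range is very short.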

The research also showed that when you go past 20 characters, sometimes it’s better to have an extra long subject line (61+ characters). It depended on the type of email.

Action Item: There’s no concrete answer about what length is always the best. Try out subject lines that are significantly different lengths, but try to keep the message as similar as possible.

For example:

- The 14 Best Ways to Split Test Email Subject Lines

- The 14 Best Subject Line Split Tests

5. Ambiguous Benefits vs. Specific Benefits

Whenever I write a blog post, there’s always one feature that I personally think is the “best.”

But just because I think it’s the best for my audience doesn’t mean they agree.

Optimizely did an interesting subject line split test that looked at this.

Compare their original subject line to the challenger:

- Original: “Get Optimizely Certified for 50% Off”

- Challenger: “Get Optimizely Certified & Advance Your Career”

There are two different benefits being tested here.

The first, and more obvious, is a price discount.

The second is career advancement.

I would have guessed that the 50% off email would have a higher open rate, but it turned out that the challenger had 13.3% more opens.

The audience cared more about career advancement than money.

Action Item: If you’re promoting a particularly in-depth piece of content or product, there are likely many benefits to your audience. Try split testing different benefits in your subject lines, but keep the rest of the subject line the same (like in the example above).

6. Content Type Specified vs. No Content Type Specified

A strange thing I’ve noticed in my years as a marketer is that readers really value certain formats of content.

A PDF file is seen by most readers as more valuable than a blog post on a web page, even if it’s the exact same content.

Sure, a PDF is more convenient sometimes, but it’s also less convenient sometimes. Regardless, readers place a lot of extra value on it.

The same can be said about eBooks, MP3 narrations, and infographics.

Optimizely tested two identical subject lines, but added a format to the beginning of one (bold added for emphasis):

- Original: “Why You’re Crazy to Spend on SEM But Not A/B Testing”

- Challenger: “[eBook] Why You’re Crazy to Spend on SEM But Not A/B Testing”

The challenger received 30% more clicks.

Not bad, right?

Action Item: Knowing there’s something valuable inside (e.g. a PDF, eBook, MP3, or infographic) will give readers extra incentive to open your email. Split test two identical versions of a subject line (like above), but add a format in square brackets to one of them.

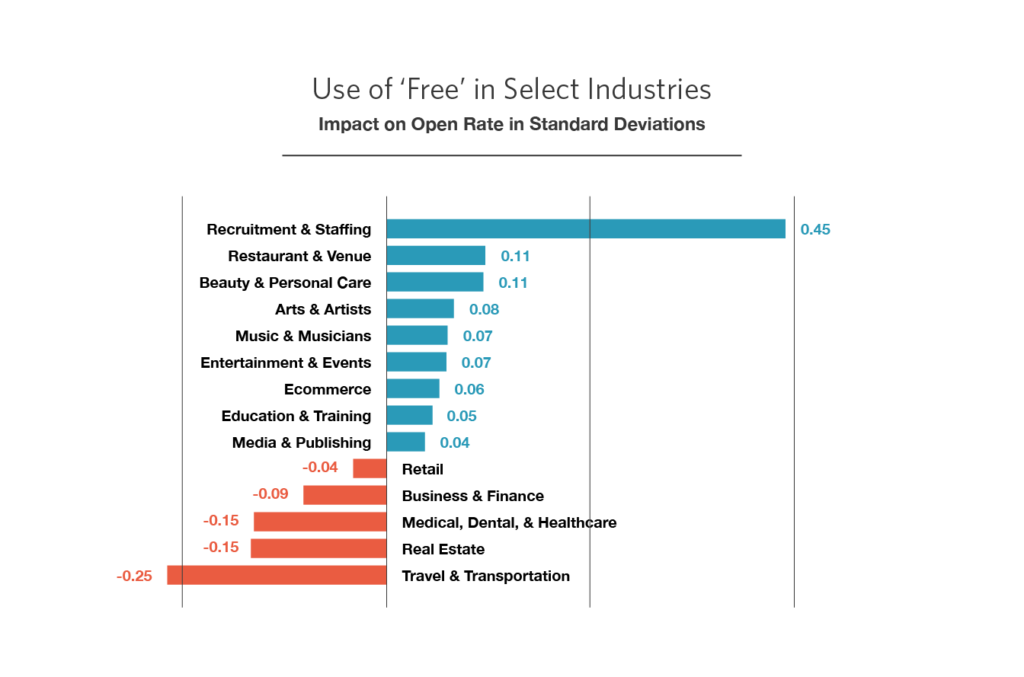

7. Free vs. Synonyms

Everyone likes “free,” right?

Well, not necessarily when it comes to emails.

On the positive side, Sidekick found that the word “free” in a subject line increased open rates by 10%.

MailChimp’s study also looked at the effectiveness of “free.”

They found that it made a significant positive difference in certain industries. In others, using the word “free” actually decreased open rates.

MailChimp did one particularly interesting comparison.

The word “freebie” was 13 times as effective as “free” at increasing open rates (across all industries).

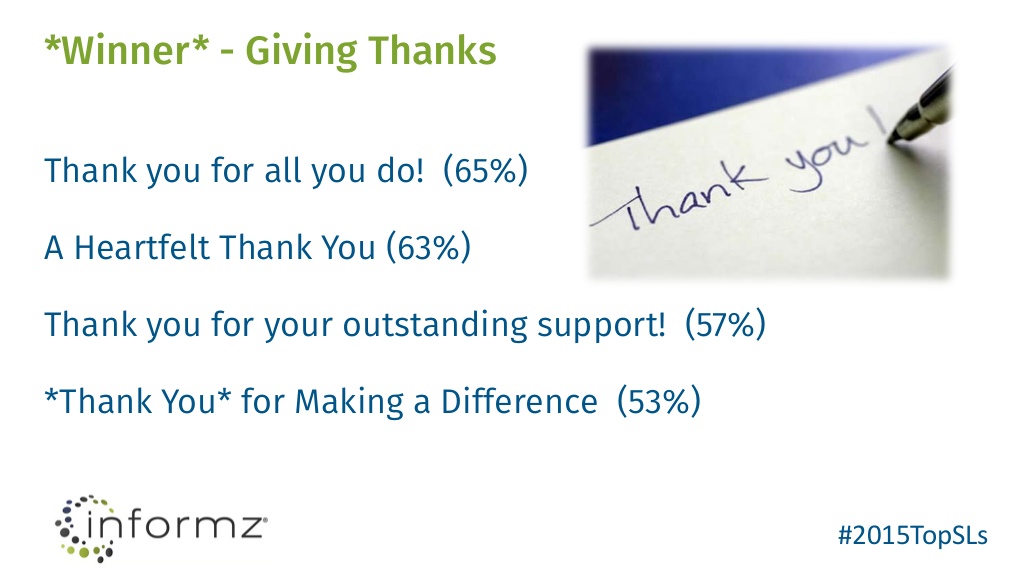

Informz did a test comparing these two subject lines (bold added for emphasis):

- Subject line A: “Complimentary Online CME Courses”

- Subject line B: “Free Online CME Courses”

Subject line A had 3% more opens, a small, but significant result.

So, if you split test this, here are some other variations of “free” that are worth testing:

- On the house

- Comp

- Freebie

- Handout

- No charge

Action Item: People like free in certain industries (usually the ones not known for scams and trickery), but not in others. Check for yourself by split testing two similar subject lines, but add in “free” to one.

For example:

- Guide to subject line split testing

- Free guide to subject line split testing

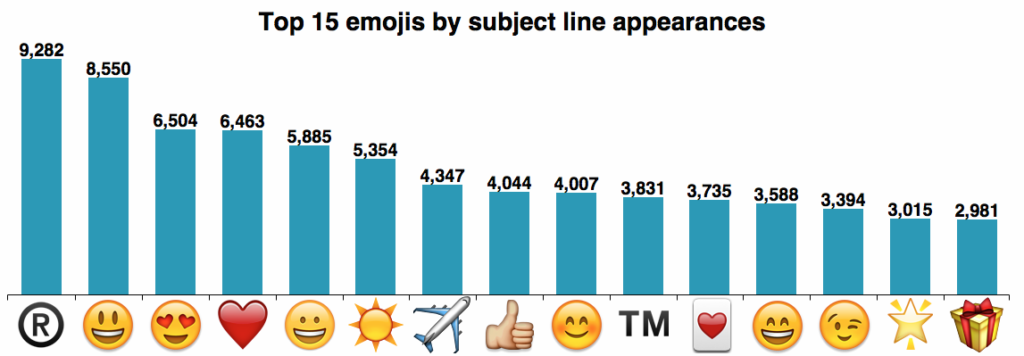

8. Emojis vs. No Emojis

If you’re dealing with a particularly young audience, emojis are a bigger part of their daily lives than you might think.

Emojis are small symbols that attract a lot of attention (good or bad) when contrasted with typical email subject lines, and most email marketing tools support adding them.

In 2012, Experian found that emails that contained an emoji had 56% higher email open rates.

That was the first major study on the topic, and it sparked further testing by other people and businesses.

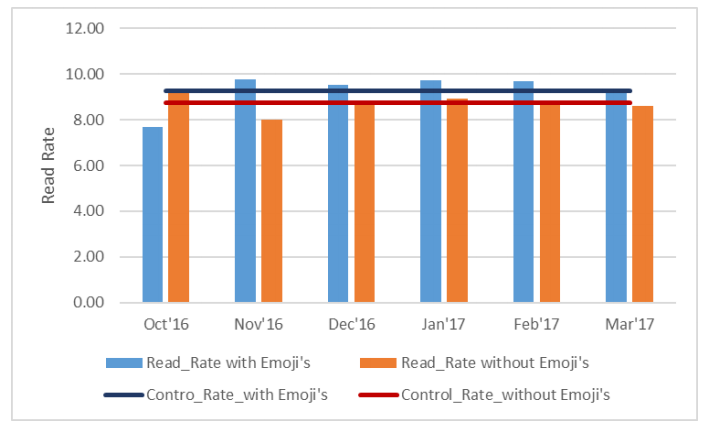

First, Expedia found that their overall email read rates with emojis went up (nearly 25% in some months).

Creative consultancy agency YellowBall also tested emojis, hoping to find similar results.

Unfortunately, the results weren’t too great.

They actually found that their emails with emojis were opened 2% less than without.

It was far from a perfect test, but still disappointing.

The results you see from split testing emojis are likely to depend heavily on your industry and audience demographics. So, make sure you know who you’re serving.

Action Item: Try adding an emoji somewhere in the subject line and split testing it against a plain version.

For example:

- 14 Ways to Split Test Your Subject Lines

- 14 Ways to Split Test Your Subject Lines 💰

9. Sentence Case vs. Title Case

The two main types of capitalization styles that we use for titles and email subject lines are:

- Sentence Case: A great email subject line

- Title Case: A Great Email Subject Line

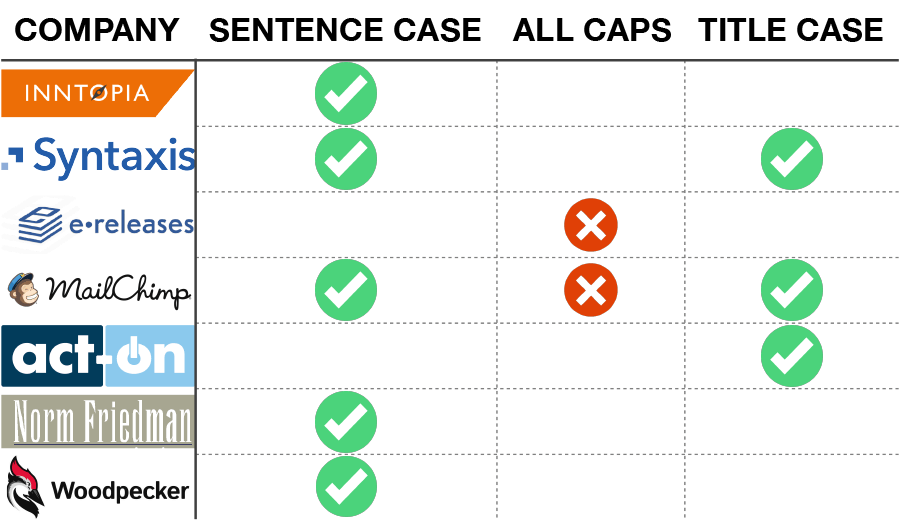

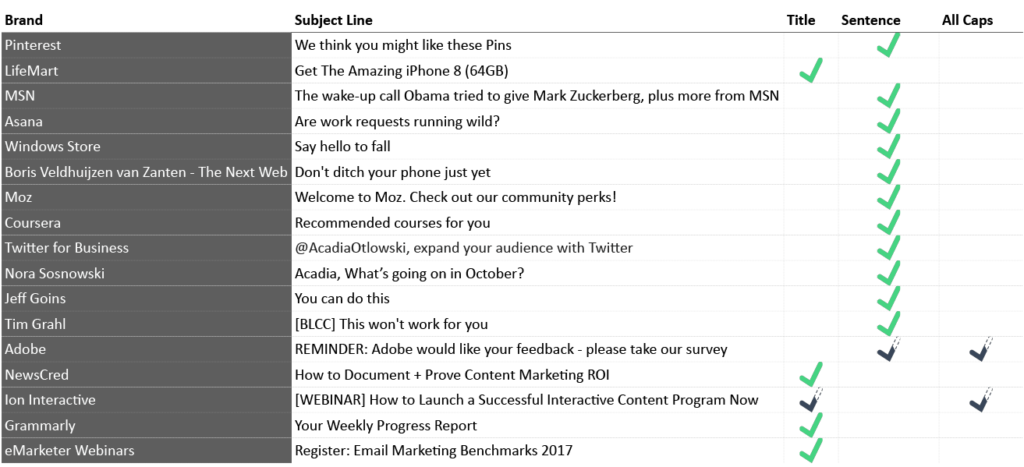

Acadia Otlowski, on behalf of HiP, did some research to try to find out if one is better than the other.

First, she noted what large companies recommended publicly:

Most recommended using sentence case in email subject lines, but about half also recommended using title case.

Next up was collecting actual emails from big brands and seeing what capitalization method they used:

Again, most used sentence case, but there was still a significant amount of title case subject lines.

The only real takeaway is that neither is clearly better than the other across the board.

But the capitalization you choose can still make a difference in your own open rates. So it’s worth testing.

Keep in mind that one style may be better than the other for certain emails, but worse for other emails. Don’t just test this once and think the results apply to all your subject lines equally. They don’t.

Action Item: Try split testing 2 identical email subject lines, but do one in sentence case, and another in title case. This is a test you should try for different types of content. See if subscribers respond to one type better in all situations, or just some.
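If you want to generate both versions from one subject line, a minimal sketch could look like this. These helpers are deliberately simple and are my own illustration: they lowercase everything else in the line, so acronyms and brand names (for example “A/B” or “eBook”) would need manual fixing.

```python
def sentence_case(subject: str) -> str:
    """Capitalize only the first character and lowercase the rest."""
    return subject[:1].upper() + subject[1:].lower()


def title_case(subject: str) -> str:
    """Capitalize the first letter of every word (simple version)."""
    return " ".join(word[:1].upper() + word[1:].lower() for word in subject.split())


subject = "a great email subject line"
print("Sentence case:", sentence_case(subject))
print("Title case:   ", title_case(subject))
```

Generating both variants from a single source string helps ensure capitalization is the only thing that differs between them.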

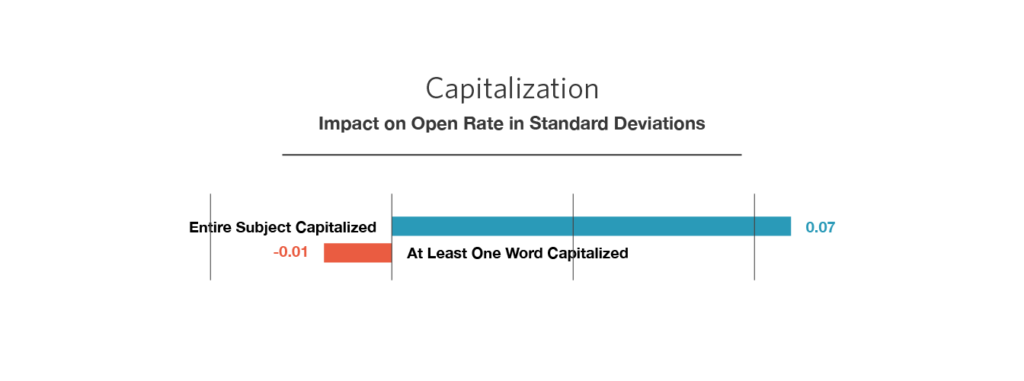

10. Capitalization vs. No Capitalization

The one capitalization style I didn’t include above was using all capital letters (e.g. “A GREAT EMAIL SUBJECT LINE”).

It can come off as spammy, but it can also help an email stand out from others in an inbox.

MailChimp found that having the entire subject line capitalized produced a small, but significant increase in open rate.

As an interesting side note, they also found that capitalizing one or more words (but not the whole subject line) produced a small decrease in open rate.

Action Item: Capitalization can help your subject lines stand out in email boxes. Split test identical subject lines, but do one in all caps. Alternatively, do a split test but only capitalize 1 word.

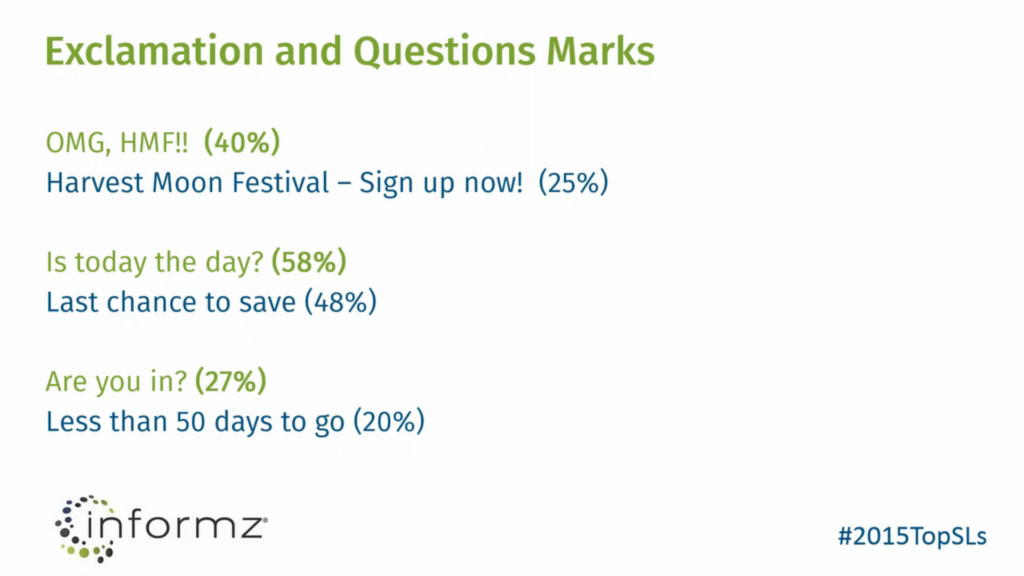

11. Exclamation Marks vs. No Exclamation Marks

Punctuation is another way to add some emotion and connection to plain text.

Informz looked at over 5,000 subject lines to find if punctuation has a significant effect on open rates.

It wasn’t a perfectly controlled test, but in general, they found that more punctuation led to better open rates.

They mainly looked at exclamation points and question marks.

SmartInsights also looked at the effect of punctuation marks in subject lines.

They found that question marks actually decreased open rates by 8.1% on average.

Again, this shows that punctuation can have a significant effect on your email open rates (positive or negative), and is situational.

Action Item: Exclamation marks appear to have the most promise for increasing email open rates. Split test different variations of subject lines, but end one with an exclamation mark (or multiple).

For example:

- The 14 Best Email Subject Line Split Tests

- The 14 Best Email Subject Line Split Tests!

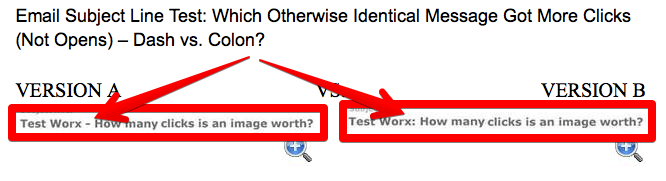

12. Hyphens vs. Colons

Is it worth split testing less meaningful punctuation like hyphens and colons?

In one test, using a hyphen in place of a colon in the exact same subject line resulted in open rates improving by 3%.

It’s not likely to make a huge difference.

But for a high-value email, a 3% open rate increase can be extremely valuable and worth testing.

This is something you should selectively split test, only on emails where a few percent difference could make a big difference in revenue.

Action Item: If you’re using either a colon or hyphen in an important subject line, split test it against the other to see if it makes a difference.

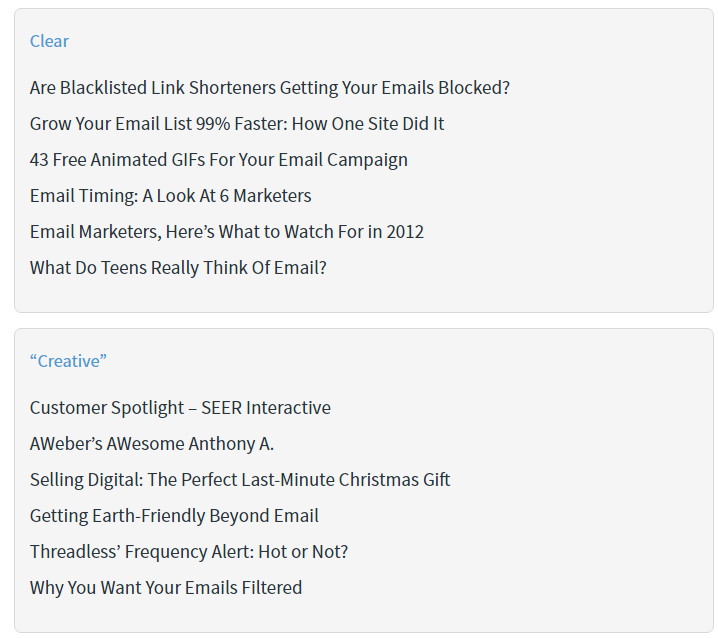

13. Ambiguous Copy vs. Specific Copy

In copywriting, it’s usually best to be clear, rather than clever.

Intuitively, that applies to writing email subject lines as well.

Most people are just trying to go through their emails as quickly as possible, and want subject lines to be clear descriptions of what’s inside.

This is a difficult thing to test, but AWeber gave it a try.

They picked a selection of “clear” emails and looked at how they performed compared to a selection of “creative” emails.

Overall, the clear emails performed better by 541%.

That’s not to say that clever emails can’t be better, but if you think you have a clever subject line that’s a bit ambiguous, split test it against a specific, clear alternative.

Action Item: People usually prefer clarity in subject lines, but some mystery may attract extra clicks.

It’s tough to test ambiguity and specificity, but you can still test both writing styles if you’re willing to try it out in multiple scenarios. A big enough sample size of tests will make the results clearer.

Here is an example of a split test you could run:

- 14 Data-backed subject line split test ideas

- Want a better email open rate? 14 Clever ways to get one…

Same topic, but the first subject line is much clearer and to the point.

14. Gratitude vs. No Gratitude

When Informz analyzed 5,000 emails, they noticed that one type of email had an incredible open rate.

Those emails all included the phrase “thank you.”

Almost all emails want something from the recipient, so it’s intuitive that an email thanking a person would be received favorably.

Action Item: You won’t always be able to thank a reader in the subject line, but when it can be included naturally, it’s one of the first things you should split test.

Conclusion

Many of the subject line A/B testing ideas I covered can have a significant impact on your email open rates.

But we also saw that many of them depend on your industry and audience demographics.

That’s why it’s important to test them for yourself and measure the results. Not just the open rates, but conversions as well.
